Did a "DEI Programmer" Cause the CrowdStrike Disaster?

Almost certainly. But not in the way you're thinking.

Adventures in C++!

Following the big CrowdStrike outage this week, the one that grounded global airline fleets, shuttered bank branches, and choked hospitals onto life support, people started hunting for scapegoats and causes. On X, I noticed a curious question trending:

"Did a DEI Programmer Cause the CrowdStrike Disaster?"

It was asked from several corners, by multiple users, with varying levels of seriousness, from dark-humored trolling to "get-a-rope"-style threats.

But a general feeling of aggravation was in the air, and outrage was spreading: after all, some affirmative-action CrowdStrike hire had fat-fingered our global economy to a standstill! After a monthlong vacation to celebrate gay pride!

Could it be true?

Short answer: Of course not!

Longer answer: Well, probably, yes, but not in the way you're thinking. It has more to do with dumb technical decisions and dumber policy choices, rubber-stamped by mid-witted bureaucrats the world over. Which we'll dig into.

But it's fun to blame woke hippies. Which we'll also do, later.

X.com: Where AI Dreams and Nightmares Smash

A side note about X. If you're passionate about artificial intelligence like I am, X is the best place to be online. My feed there is a veritable firehose of what the crypto bros used to call alpha: pure and unadulterated information about the latest happenings in AI. Hot papers, state-of-the-art techniques, backroom industry intrigues, and deep lessons from the best minds in the business.

Of course, because it’s X, it's also a toxic fire-swamp of misinformation, conspiratorial heebejeebery, erudite quackery, and antisocial nonsense.

For some reason, Elon Musk, genius industrialist, owner of X, and self-avowed autist, often fans the worst of the sexist, race-baiting flames, either because he believes them to be true or because he believes (with evidence) that unhinged fire-swampery attracts eyeballs and spikes user engagement.

(Full transparency: I'm writing this post for the same reason as Elon — you've read this far because you're already feeling pissed and partisan, and …ooooh look… there might be more delicious swamp bait below. I crave your sweet, angry eyeballs. Thanks for your attention. Please Like and Subscribe.)

The CrowdStrike Clusterfuck: A Technical Deep Dive

Let's set aside the jokes for a moment and dive into what actually happened with the CrowdStrike outage. This wasn't just a simple bug – it was a cascading failure that rippled through systems worldwide. Here's the breakdown:

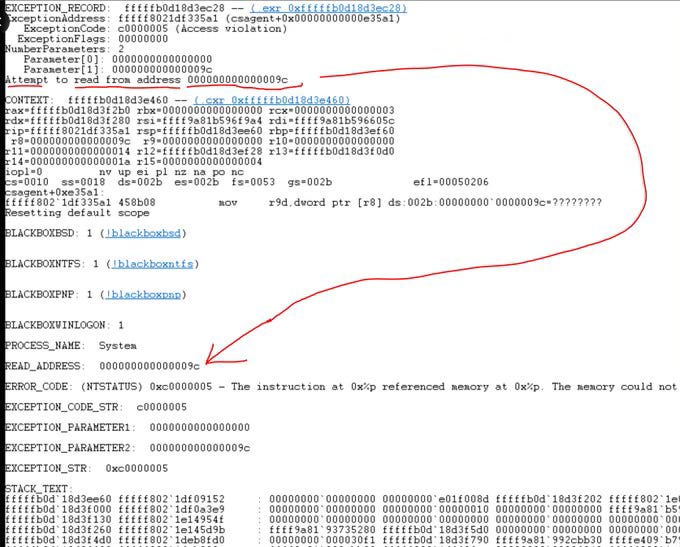

The Root Cause: NULL Pointer Dereference

At the heart of the issue, according to early public analysis of the crash dump, was a NULL pointer dereference in CrowdStrike's C++ code. The program tried to access memory at an invalid address (specifically, address 0x9c, or 156 in decimal), causing a system-wide crash. We’ll dig deeper into the nuts and bolts in a bit. But first:

Why It Was So Catastrophic

The problem occurred in a system driver, which has privileged access to the computer. Here's why this was particularly bad:

Kernel-Mode Driver: CrowdStrike's Falcon software operates as a kernel-mode driver, running with the highest privileges in the Windows operating system.

Blue Screen of Death: When a kernel-mode driver crashes, it typically causes a system-wide crash, resulting in the infamous "Blue Screen of Death" (BSOD).

Forced Reboot: A BSOD requires a system reboot to recover, which can lead to data loss and service interruptions.

The Spread of the Problem

The issue became widespread due to several factors:

Automatic Updates: CrowdStrike, like many security software providers, uses automatic updates to ensure systems are protected against the latest threats.

Rapid Deployment: The faulty update was pushed out to a large number of systems simultaneously.

Diverse Environments: The bug manifested across a wide range of Windows versions and hardware configurations, making it difficult to isolate and contain quickly.
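For the curious, here's roughly what the alternative to an all-at-once push looks like. This is a minimal sketch of a staged (canary) rollout, not CrowdStrike's actual deployment logic: each machine is hashed into a stable bucket from 0 to 99, and an update only reaches machines whose bucket falls under the current rollout percentage. The names `fnv1a` and `in_rollout`, and the idea of a string host ID, are made up for illustration.

```cpp
#include <cstdint>
#include <string>

// 32-bit FNV-1a hash: small, deterministic, and identical on every machine,
// so a given host always lands in the same bucket.
std::uint32_t fnv1a(const std::string& s) {
    std::uint32_t h = 2166136261u;      // FNV offset basis
    for (unsigned char c : s) {
        h ^= c;
        h *= 16777619u;                 // FNV prime
    }
    return h;
}

// Hypothetical rollout gate: true if this host should receive the update
// when the rollout is at `percent` (0 to 100).
bool in_rollout(const std::string& host_id, unsigned percent) {
    return fnv1a(host_id) % 100u < percent;
}
```

Start at 1 percent, watch for crashes, then raise the number. Because the bucket depends only on the host ID, a machine that received the update at 1 percent is still included at 10 percent, so widening the rollout never pulls the update back from early recipients.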

Downstream Effects

The impact of this bug went far beyond individual computers:

Air Travel Disruption: Many airlines use CrowdStrike for cybersecurity. The outage caused flight delays and cancellations as airlines' check-in and operations systems went down.

Financial Sector Impacts: Banks and financial institutions experienced service interruptions, affecting everything from ATM operations to online banking services.

Healthcare System Strain: Hospitals and healthcare providers using affected systems faced challenges in accessing patient records and operating medical equipment.

Corporate Paralysis: Many businesses found their operations grinding to a halt as employee workstations and servers crashed.

Why Didn't Testing Catch This?

You might wonder how such a critical bug made it through testing. Several factors could contribute:

Environment Variability: The bug might only manifest under specific conditions that weren't replicated in testing environments.

Time Pressure: In the fast-paced world of cybersecurity, there's often pressure to release updates quickly to address new threats.

Complexity of Interactions: Modern software systems are incredibly complex, with many interdependencies that can be difficult to fully test.

These factors highlight the challenges in developing robust, error-free code, especially in languages like C++ that allow for low-level memory manipulation. Let's dive deeper into the technical aspects of what went wrong.

The Technical Nitty-Gritty

Zach Vorhies, a “professional C++ programmer”, posted a detailed analysis of the stack trace dump (on X, of course!). Here's what he says it reveals:

Memory Layout: In computer memory, which is essentially a large array of numbers, address 0x0 (NULL) is used to indicate "nothing here." Attempting to read from or near this address typically results in immediate program termination.

Pointer Arithmetic Gone Wrong: The specific address 0x9c suggests that the code attempted to access a member variable of an object through a NULL pointer. Compiled code finds a member by adding its offset within the object to the object's base address, so a NULL base (0x0) plus an offset of 0x9c lands on 0x9c.

Lack of Null Checks: This issue could have been prevented with proper null pointer checks in the code. For example:

    if (obj != NULL) {
        // Access obj members
    } else {
        // Handle null case
    }

Privileged Access: Because this occurred in a system driver with high privileges, the operating system had to immediately crash to prevent potential security breaches or data corruption.
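To see where a number like 0x9c can come from, here's a small sketch. The `SensorRecord` struct is hypothetical (nobody outside CrowdStrike has seen the real layout); it simply places a field 156 bytes into an object and computes the address the CPU would touch for a given base pointer, without ever dereferencing anything.

```cpp
#include <cstddef>
#include <cstdint>

// Hypothetical layout: 0x9c (156) bytes of other data, then the field of interest.
struct SensorRecord {
    std::uint8_t  header[0x9c];
    std::uint32_t flags;        // lives at offset 0x9c from the start of the object
};

// Checked at compile time so the comment above cannot drift from reality.
static_assert(offsetof(SensorRecord, flags) == 0x9c, "flags should sit at offset 0x9c");

// Address the CPU would read for `record->flags`, given the object's base address.
// Pure arithmetic on integers: nothing is dereferenced here.
std::uintptr_t member_address(std::uintptr_t base) {
    return base + offsetof(SensorRecord, flags);
}
```

With a base of 0, `member_address` returns 0x9c, which is the pattern Vorhies read in the crash dump: not address zero itself, but a small offset from it.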

This incident serves as a stark reminder of how a simple programming error in a critical system can have far-reaching consequences. It also highlights the ongoing challenges in software development and deployment, especially for systems that require both security and stability.

How Languages Like Rust Could Help

Modern programming languages like Rust offer several features that could prevent issues like this:

Memory Safety: Rust's ownership system and borrow checker catch common memory errors at compile time, and safe Rust has no null references to dereference in the first place.

Option Type: Instead of nullable pointers, Rust uses an `Option<T>` type, forcing developers to explicitly handle both the "some" and "none" cases.

Pattern Matching: Rust's pattern matching encourages thorough handling of all possible states of an `Option<T>`, reducing the likelihood of overlooking edge cases.

Fearless Concurrency: Rust's ownership model also prevents data races, a common source of bugs in multi-threaded applications.

Zero-Cost Abstractions: Rust provides these safety features without significant runtime performance costs, making it suitable for system-level programming.

Here's how the problematic code might look in Rust:

    fn process_data(obj: Option<&Object>) {
        match obj {
            Some(o) => {
                // Safely access o's members
            },
            None => {
                // Handle the case where obj is None
            }
        }
    }

This Rust code explicitly handles both the case where `obj` exists and where it doesn't, preventing the kind of error that caused the CrowdStrike outage.
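Teams that can't leave C++ can borrow part of the idea. The sketch below, assuming C++17 and a hypothetical `Object` with a single `flags` field, wraps the null check in a function that returns `std::optional`, so the "nothing here" case shows up in the return type. Unlike Rust, the compiler won't stop a caller from ignoring it.

```cpp
#include <cstdint>
#include <optional>

// Hypothetical stand-in for whatever the driver was reading.
struct Object {
    std::uint32_t flags;
};

// Returns the flags if obj points at something, std::nullopt otherwise.
std::optional<std::uint32_t> read_flags(const Object* obj) {
    if (obj == nullptr) {
        return std::nullopt;    // the "None" case, made explicit
    }
    return obj->flags;          // the "Some" case
}

// Callers choose what a missing object means instead of crashing on it.
std::uint32_t flags_or_default(const Object* obj, std::uint32_t fallback) {
    return read_flags(obj).value_or(fallback);
}
```

Calling `flags_or_default(nullptr, 0)` returns the fallback rather than touching memory near address zero.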

While languages like Rust offer compelling safety features, transitioning large, established codebases to new languages is a significant challenge. This brings us to our next point: why do we stick with potentially dangerous legacy systems, and what can we do about it?

Could This Have Been Avoided? A Walk Through Tech History and Policy Parallels

To understand how we ended up here, we need to take a stroll down memory lane and examine how both technical and policy decisions evolve over time across the tech industry. Spoiler alert: It's not always pretty, and it's rarely the fault of just one player.

Dumb Technical Choices (Or: How We Learned to Stop Worrying and Love Legacy Code)

The Rise of C++: When C++ emerged in the 1980s, it was a game-changer. It offered object-oriented programming with the performance of C, making it ideal for system-level software. Companies across the tech industry adopted it for good reasons:

Performance: Critical for software that needs to run efficiently.

Low-level control: Necessary for interacting directly with hardware.

Wide adoption: Abundant libraries and a large talent pool.

Fast forward to today, and C++ is deeply entrenched in many systems across numerous companies. Replacing it is like trying to change the foundation of a skyscraper while people are still working inside.

The Cost of Change: As systems grew more complex industry-wide, the cost of replacing C++ codebases skyrocketed. It's not just about rewriting code; it's about:

Retraining entire engineering teams across the sector

Ensuring compatibility with existing systems in diverse environments

Maintaining performance during and after transition

Managing the risk of introducing new bugs during rewrites

The Inertia of Success: Paradoxically, the very success of C++-based systems made them harder to replace across the industry. When products are making millions and protecting critical infrastructure, there's immense pressure to avoid rocking the boat.

This situation mirrors many government policies. Think of social security systems built on COBOL, or healthcare policies that made sense decades ago but are now outdated. The cost and risk of wholesale changes often lead to band-aid solutions rather than fundamental overhauls.

Dumber Policy Choices (Or: How to Make Bad Situations Worse)

The Tyranny of the Urgent: In both tech and government, there's often a focus on short-term fixes rather than long-term solutions. Across the tech industry, this manifests as:

Pushing rapid updates to address immediate security threats

Prioritizing new features over foundational improvements

Underinvesting in robust testing and rollback mechanisms

This mirrors government policies that focus on quick wins rather than addressing underlying issues. Think of economic stimulus packages that provide temporary relief but don't address structural economic problems.

One-Size-Fits-All Solutions: Many tech companies adopt forced update policies, reminiscent of broad government mandates that don't account for diverse needs:

Ignoring the varying risk tolerances of different clients

Assuming all systems can handle the same update process

Overlooking the potential for cascading failures in interconnected systems

This is akin to nationwide education policies that don't account for local needs, or blanket regulations that might work for large corporations but stifle small businesses.

The Illusion of Control: Both in tech and government, there's often an overestimation of how much control we have over complex systems:

Assuming that because an update worked in testing, it will work everywhere

Underestimating the potential for unforeseen interactions in diverse environments

Overlooking the human factor in how updates are implemented and managed

This mirrors the way some policies are implemented with insufficient consideration for real-world complexities, like drug policies that ignore socioeconomic factors or immigration policies that don't account for global economic forces.
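On the tech side, the rollback mechanism mentioned above doesn't have to be exotic. Here's a minimal user-space sketch, under my own assumptions, of a "last known good" policy: if the machine fails to boot cleanly a set number of times after an update, fall back to the previous version. `ContentUpdater`, its methods, and the version strings are all hypothetical, and a real endpoint agent would have to run this logic early in boot, before the faulty component loads.

```cpp
#include <string>

// Hypothetical "last known good" tracker for content updates.
class ContentUpdater {
public:
    ContentUpdater(const std::string& initial, int max_failures)
        : active_(initial), last_good_(initial), max_failures_(max_failures) {}

    // Switch to a new version; it stays unproven until a healthy boot.
    void install(const std::string& version) {
        active_ = version;
        failures_ = 0;
    }

    // Record the outcome of one boot. Returns true if a rollback happened.
    bool report_boot(bool healthy) {
        if (healthy) {
            last_good_ = active_;   // the active version has now proven itself
            failures_ = 0;
            return false;
        }
        ++failures_;
        if (failures_ >= max_failures_ && active_ != last_good_) {
            active_ = last_good_;   // give up on the new version
            failures_ = 0;
            return true;
        }
        return false;
    }

    const std::string& active() const { return active_; }

private:
    std::string active_;
    std::string last_good_;
    int max_failures_;
    int failures_ = 0;
};
```

With `max_failures` set to 2, installing "v2" over a healthy "v1" and then reporting two failed boots puts "v1" back without anyone walking from desk to desk in safe mode.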

The Midwit Bureaucrats (Or: How Good Intentions Pave the Road to Tech Hell)

The Curse of Expertise: In both tech companies and government agencies, deep expertise in one area can lead to blind spots:

Engineers focused on cutting-edge features might overlook basic safety checks

Managers with financial expertise might undervalue technical debt

Security experts might push for updates that compromise system stability

This is similar to policy experts who deeply understand their field but may miss how their policies interact with other sectors of society.

Silo Mentality: Large organizations across the tech industry often suffer from poor communication between departments:

Security teams might not fully coordinate with operations teams

Customer support insights might not reach development teams

Risk assessment might be disconnected from product roadmaps

This echoes the way government departments often operate in isolation, leading to conflicting or redundant policies.

The Accountability Paradox: In complex systems, it's often difficult to assign clear responsibility for failures:

Multiple teams contribute to each update, obscuring individual accountability

Pressure to meet deadlines can override cautious voices

Success has many parents, but failure is an orphan

This mirrors the difficulty in holding policymakers accountable for the long-term effects of their decisions, especially when those effects span multiple election cycles.

The Blame Game: Why It's Easier to Point Fingers Than Fix Systems

After dissecting the technical, policy, and bureaucratic failures that led to our current tech predicament, you might be wondering: "Why did so many people jump to blame 'DEI hires' for the CrowdStrike fiasco?" Well, buckle up, because we're about to take a trip through the murky waters of human psychology and societal scapegoating.

The Comfort of Simple Solutions

Let's face it: it's a lot easier to blame a single group or idea for complex problems than to grapple with systemic issues. It's the same reason why we love conspiracy theories – they offer simple explanations for a chaotic world. Blaming "woke culture" or DEI initiatives for tech failures is the cognitive equivalent of comfort food: it might make you feel better momentarily, but it's not solving the real problem.

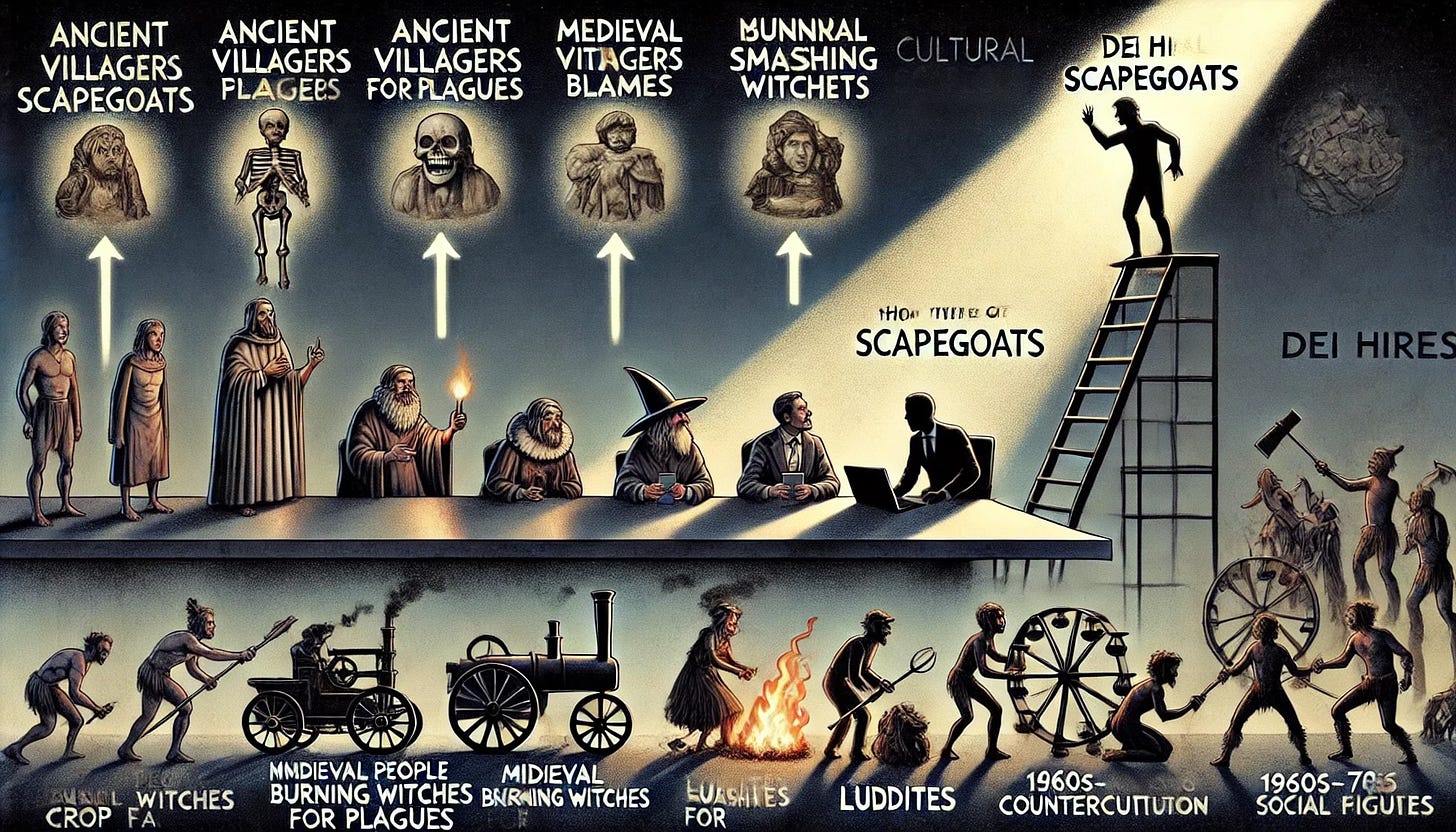

A Brief History of Scapegoating

Scapegoating is as old as human society itself. Let's take a quick (and admittedly oversimplified) tour:

Ancient Times: Got a plague? Blame the outsiders! Ancient societies often attributed natural disasters or diseases to divine punishment, usually for tolerating the 'wrong' people.

Early Modern Europe: Crop failure? Must be witches! The witch hunts of the 15th-17th centuries are a prime example of society looking for easy targets to blame for complex problems.

Industrial Revolution: Machines taking jobs? Blame the Luddites! The original Luddites weren't against technology per se, but against unfair labor practices. Yet, they became synonymous with anti-progress sentiment.

1960s-70s: Social upheaval in the US? It's those darn hippies! The counterculture movement became an easy target for those uncomfortable with rapid social change.

Today: Tech problems? Must be those DEI hires!

See the pattern? When faced with complex, systemic issues, humans have a tendency to look for a convenient "other" to blame.

The DEI Scapegoat: A Sign of Our Times

So, why has DEI become the latest scapegoat, particularly in tech? Several factors are at play:

Rapid Social Change: Much like the 1960s, we're in a period of significant social transformation. DEI initiatives represent this change, making them a target for those resistant to it.

Visibility: As tech companies make public commitments to diversity, these efforts become highly visible targets.

Misunderstanding of Merit: There's a pervasive myth that DEI somehow contradicts meritocracy, ignoring the systemic barriers that have historically prevented truly merit-based hiring.

Political Polarization: In our current political climate, particularly with a U.S. election looming, DEI has become a political football, often divorced from its actual goals and implementation.

Tech Industry Growing Pains: As the tech industry grapples with its own systemic issues (remember our discussion on legacy code and outdated practices?), DEI becomes an easy distraction from deeper, more entrenched problems.

The Real Cost of Scapegoating

While blaming DEI or any other group might offer momentary satisfaction, it comes with real costs:

Missed Opportunities: By focusing on false culprits, we miss chances to address real, systemic issues in tech and society.

Talent Loss: Scapegoating can drive away talented individuals from underrepresented groups, exacerbating the very problems DEI aims to solve.

Innovation Stagnation: Diverse teams have been shown to be more innovative. By resisting DEI, tech companies might be shooting themselves in the foot.

Societal Division: Scapegoating deepens societal rifts, making it harder to collaborate on solving complex problems.

Breaking the Cycle

So, how do we move past this unproductive blame game? A few suggestions:

Embrace Complexity: Recognize that most significant problems, in tech and society, are multifaceted and resist simple explanations or solutions.

Promote Tech Literacy: The more people understand how technology actually works, the less likely they are to fall for oversimplified explanations of tech failures.

Advocate for Transparency: Push for clear, honest communication from tech companies about their successes, failures, and ongoing challenges.

Support Genuine DEI Efforts: Understand that true DEI initiatives are about expanding the talent pool and bringing diverse perspectives to problem-solving, not about lowering standards.

Practice Critical Thinking: Whether it's a viral tweet blaming DEI for a tech outage or a politician scapegoating a group for societal problems, take a moment to question and verify before jumping on the blame bandwagon.

Remember, the next time you're tempted to blame a complex tech disaster (or any complex problem) on a convenient scapegoat, take a deep breath and ask yourself: "Is this really the root cause, or am I falling for an age-old human tendency to oversimplify?" Chances are, the real answer is far more complex – and far more interesting – than any scapegoat theory.

In tech, as in society, progress comes not from finding perfect villains to blame, but from acknowledging our collective challenges and working together to address them. So let's roll up our sleeves and tackle the real issues – faulty update mechanisms, outdated systems, and yes, the need for truly inclusive and diverse teams to help us solve these complex problems.

The CrowdStrike outage serves as a stark reminder of the interconnectedness of our digital world and the far-reaching consequences of even small technical failures. But more than that, it highlights the dangers of oversimplification and scapegoating in an industry that thrives on complexity and innovation.

As we move forward, let's commit to:

Embracing Technical Excellence: Push for better coding practices, more robust testing, and smarter update mechanisms. The next generation of tech shouldn't be built on the shaky foundations of the past.

Fostering Inclusive Innovation: Recognize that diversity in tech isn't just about meeting quotas – it's about bringing together a wealth of perspectives to solve the intricate problems of our digital age.

Promoting Tech Literacy: In a world increasingly driven by technology, understanding the basics of how our digital systems work is as crucial as any other form of literacy.

Encouraging Systemic Thinking: Whether you're a coder, a manager, or a user, try to see beyond the immediate problem to the larger systems at play. It's in understanding these complex interactions that we'll find lasting solutions.

Maintaining a Sense of Humor: Let's face it, in the fast-paced world of tech, things will go wrong. Sometimes spectacularly so. Keeping our sense of humor intact (while still taking our responsibilities seriously) can help us navigate the inevitable bumps in the road.

After all, in the face of global tech outages or societal challenges, we're all in this together. And together, with clear eyes, open minds, and maybe a bit of that irreverent tech humor, is how we'll find our way forward.

So the next time you hear someone trying to pin a complex tech failure on a simplistic cause – be it DEI initiatives, woke culture, or the phase of the moon – maybe gently remind them (and yourself) that the real world of technology is far more intricate, challenging, and yes, interesting than any scapegoat theory could ever be.

Now, if you'll excuse me, I need to go check if my toaster is spying on me for the Deep State. In this industry, you can never be too careful – or too serious. Keep coding, keep questioning, and for the love of all that's holy in Silicon Valley, remember to check your pointers!