Introducing RL Security Verifiers

Teaching AI to Think Like a Security Expert | Reinforcement Learning for Cybersecurity

How do we ensure AI systems can protect us from cyber threats without becoming threats themselves? I’ve launched and open-sourced a project called Security Verifiers that starts to tackle this challenge through training environments on Prime Intellect’s new Environments Hub.

The Problem: AI Needs Better Security Training

Imagine training a new security analyst. You wouldn’t simply show them examples of attacks and hope they figure it out. You’d give them real scenarios, let them use actual tools, and verify they made the right decisions for the right reasons.

Current AI training falls short of this standard. Most models learn from static text examples without hands-on practice with security challenges. They can’t test their assumptions, use security tools, or learn from verified outcomes. Picture training a pilot using only textbooks - they’d have theoretical knowledge but lack the judgment that comes from actual flight time.

The stakes have never been higher. In July 2025, Carnegie Mellon researchers made a startling discovery: AI systems can now autonomously plan and execute sophisticated cyberattacks. In the researchers’ tests, the AI recreated the infamous 2017 Equifax data breach - the one that compromised 147 million people’s data - entirely without human help. The AI succeeded in 9 out of 10 test scenarios.

Why This Matters Now

AI integration into digital infrastructure continues to accelerate - from fraud detection to network protection to code generation. The latest data paints a concerning picture: AI-driven attacks have surged nearly 600% in 2025, with most financial services companies reporting they’ve been hit by AI-related security incidents.

We need AI systems with specific capabilities:

Detection accuracy with manageable false positive rates - Security teams already struggle with alert fatigue

Vulnerability remediation that maintains system functionality - A fix that breaks production is worse than no fix

Resistance to adversarial manipulation - Attackers actively try to fool AI defenses

Calibrated confidence levels - Knowing when to escalate to human analysts

The rise in AI-powered cyberattacks adds urgency. One study found that enterprises were discovering more than 500 unauthorized AI tools in use across their networks - employees unknowingly creating security holes by using consumer AI services for work tasks. Both defenders and attackers now leverage AI capabilities. Training defensive AI on textbook examples while attackers use sophisticated techniques creates a dangerous asymmetry.

Perhaps most concerning: Microsoft recently patched a critical vulnerability, dubbed “EchoLeak,” in its Copilot AI assistant. Attackers could steal sensitive company data just by sending a specially crafted email - no clicking required. It marked the first known “zero-click” attack on an AI assistant, showing how AI itself can become the vulnerability.

Understanding Reinforcement Learning: The Core Technology

Before diving into Security Verifiers, let’s understand the key technology behind it: reinforcement learning (RL). Unlike traditional AI training where models learn from labeled examples (“this email is spam, that one isn’t”), RL works through trial and error with rewards and penalties.

Think of how you learned to ride a bike. Nobody gave you thousands of labeled examples of “correct pedaling” versus “incorrect pedaling.” Instead, you tried different approaches, fell occasionally, and gradually learned what worked through direct feedback from reality. When you stayed upright, that was your reward. When you fell, that was the penalty. Your brain adjusted based on these outcomes.

Reinforcement learning gives AI systems this same capability. The AI tries different approaches to security tasks, receives rewards for successful outcomes (catching real threats, fixing actual vulnerabilities), and penalties for failures (missing attacks, breaking systems). Over thousands of attempts, it develops sophisticated strategies based on what actually works, rather than what humans think should work.
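To make the trial-and-error loop concrete, here is a minimal sketch of one of the simplest RL setups, an epsilon-greedy bandit. It is a toy illustration only, not the training method Security Verifiers uses: the three "strategies" and their success probabilities are invented, and the learner never sees those probabilities, only the rewards it collects.

```python
import random


def train_bandit(reward_probs, steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy learner: estimate each action's value from rewards alone.

    reward_probs holds hypothetical success rates for each strategy; the
    learner only observes the 0/1 reward after each attempt.
    """
    rng = random.Random(seed)
    values = [0.0] * len(reward_probs)  # running average reward per action
    counts = [0] * len(reward_probs)    # how often each action was tried
    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: occasionally try a random strategy.
            action = rng.randrange(len(reward_probs))
        else:
            # Exploit: use the strategy that has worked best so far.
            action = max(range(len(values)), key=values.__getitem__)
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        counts[action] += 1
        # Incremental mean update toward the observed reward.
        values[action] += (reward - values[action]) / counts[action]
    return values, counts


# Three made-up strategies that succeed 20%, 50%, and 80% of the time.
values, counts = train_bandit([0.2, 0.5, 0.8])
best = max(range(len(values)), key=values.__getitem__)
print(f"preferred strategy: {best}, attempts per strategy: {counts}")
```

Over many attempts the learner shifts most of its effort to the strategy that actually pays off, without ever being told which one that is.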

Recent research suggests this approach works: AI systems trained with RL are detecting novel types of attacks they have never seen before, adapting in real time as threats evolve.

The Solution: Security Verifiers

Security Verifiers is an experimental project exploring whether we can create realistic training environments where AI systems develop security skills through practice and verification. Think of it as an attempt to build a flight simulator for cybersecurity AI - a safe space to learn from both successes and failures.

The project aims to introduce several key innovations:

1. Real Tools, Real Verification

The goal is for AI to go beyond guessing whether an email contains phishing. In the envisioned system, it would check URLs against threat intelligence databases, verify domain registration dates, analyze sender authentication records (SPF, DKIM), and use the same tools human analysts employ. The crucial difference: we would verify outcomes based on actual results, not human opinions that might be inconsistent or incorrect.

This verification would happen programmatically. For code vulnerabilities, we’d run actual exploit attempts against the code. For configuration audits, we’d use policy engines that mathematically prove whether a configuration meets security requirements. The AI would learn from ground truth, not subjective judgments.

2. Calibration: Teaching Appropriate Uncertainty

In security, overconfidence kills. A system that claims 100% certainty about a sophisticated attack when it should only be 60% confident creates dangerous blind spots. Security Verifiers introduces calibration rewards - the AI gains points for accurate confidence levels, not just correct answers.

Here’s how it works: If the AI says it’s 90% confident something is malicious, it should be right about 90% of the time across many similar cases. If it’s actually only right 60% of the time, it receives penalties for overconfidence. This trains the system to express uncertainty appropriately - crucial when deciding whether to block traffic, quarantine files, or escalate to human analysts.
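One common way to reward accurate confidence is a proper scoring rule such as the Brier score. The sketch below is an assumption about how a calibration reward could be shaped, not the project's confirmed formula; the function names `brier_reward` and `calibration_gap` are hypothetical.

```python
def brier_reward(confidence, is_malicious):
    """Reward in [0, 1]: one minus the squared error between the stated
    probability of 'malicious' and what actually happened."""
    outcome = 1.0 if is_malicious else 0.0
    return 1.0 - (confidence - outcome) ** 2


def calibration_gap(confidences, outcomes):
    """Mean stated confidence minus the observed malicious rate.
    A positive value means the model is overconfident on this batch."""
    mean_confidence = sum(confidences) / len(confidences)
    observed_rate = sum(outcomes) / len(outcomes)
    return mean_confidence - observed_rate


# Ten similar cases: six are truly malicious, four are benign.
outcomes = [True] * 6 + [False] * 4

# A model that claims 90% every time versus one that claims 60%.
overconfident = sum(brier_reward(0.9, o) for o in outcomes) / len(outcomes)
calibrated = sum(brier_reward(0.6, o) for o in outcomes) / len(outcomes)
gap = calibration_gap([0.9] * 10, outcomes)

print(f"overconfident: {overconfident:.2f}, calibrated: {calibrated:.2f}, gap: {gap:.2f}")
```

In this example the 60%-confident model earns the higher average reward even though both models flag the same cases, because its stated confidence matches how often it is actually right.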

3. Asymmetric Costs: Reflecting Operational Reality

Missing a real attack carries different consequences than a false alarm. But excessive false alarms create their own problem - alert fatigue that causes teams to ignore warnings. Security Verifiers incorporates these trade-offs directly into the training.

The reward structure might penalize a missed attack 10 times more heavily than a false positive. But it also includes diminishing rewards if the false positive rate exceeds operational thresholds. The AI learns to navigate this balance, developing strategies that catch real threats while maintaining usable alert volumes.
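A minimal sketch of such a reward, assuming the 10:1 penalty ratio mentioned above plus a hypothetical 10% false positive budget and a 0.5 scaling factor once that budget is exceeded. The function name and all default values are illustrative, not taken from the project.

```python
def batch_reward(predictions, labels, fn_cost=10.0, fp_cost=1.0,
                 max_fp_rate=0.10, over_budget_scale=0.5):
    """Score a batch of attack/benign decisions with asymmetric costs.

    predictions and labels are lists of booleans (True = attack).
    All default values are illustrative assumptions.
    """
    pairs = list(zip(predictions, labels))
    correct = sum(1 for p, y in pairs if p == y)
    missed = sum(1 for p, y in pairs if y and not p)        # false negatives
    false_alarms = sum(1 for p, y in pairs if p and not y)  # false positives
    benign = sum(1 for y in labels if not y)
    fp_rate = false_alarms / benign if benign else 0.0

    earned = float(correct)
    if fp_rate > max_fp_rate:
        # Diminish what correct answers earn once alert volume is unusable.
        earned *= over_budget_scale
    return earned - fn_cost * missed - fp_cost * false_alarms


labels = [True] * 2 + [False] * 18            # 2 attacks, 18 benign events
careful = [True] * 2 + [True] + [False] * 17  # catches both, 1 false alarm
misses_one = [True, False] + [False] * 18     # quiet, but misses an attack
noisy = [True] * 2 + [True] * 6 + [False] * 12  # catches both, 6 false alarms

for name, preds in [("careful", careful), ("misses_one", misses_one), ("noisy", noisy)]:
    print(name, batch_reward(preds, labels))
```

With these numbers the careful policy scores highest, a single missed attack costs far more than a single false alarm, and the noisy policy is punished twice: once per false alarm and again through the reduced payout for exceeding the budget.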

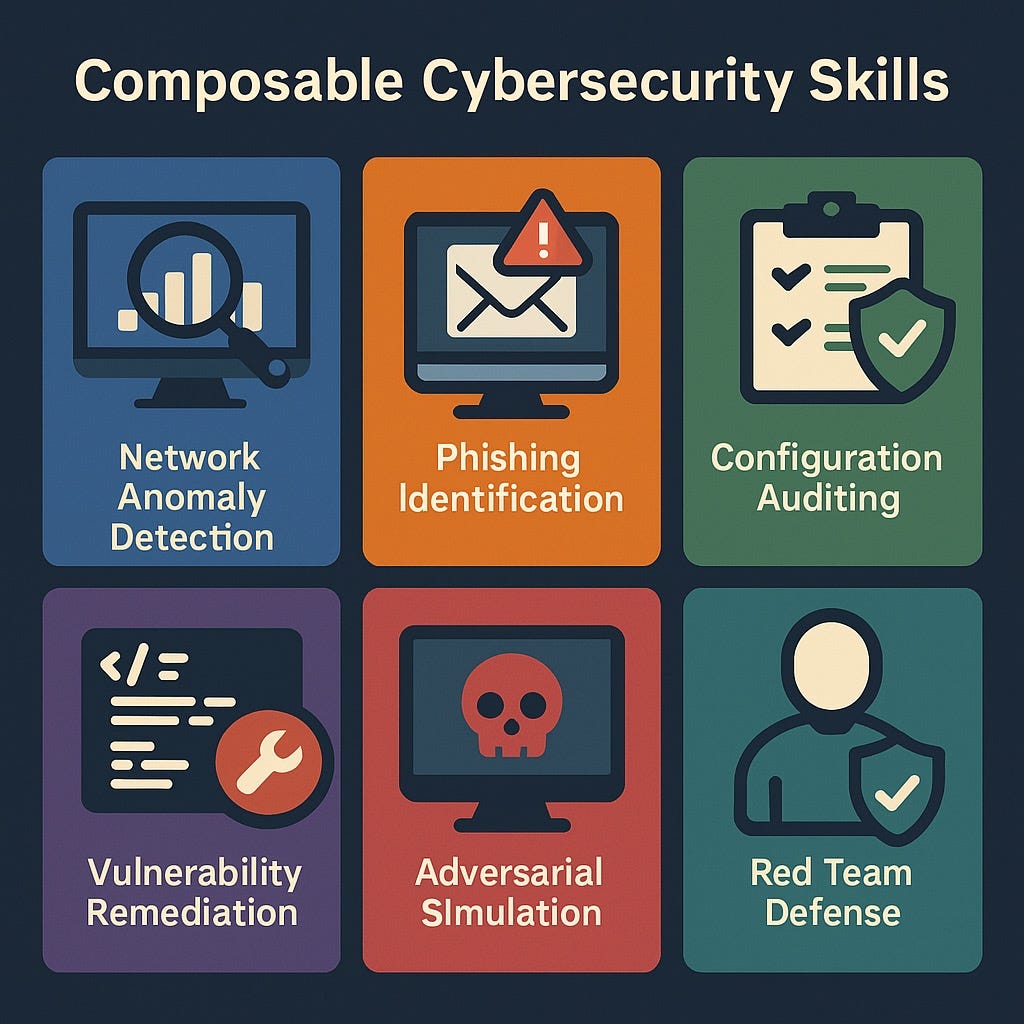

4. Composable Skills Through Six Environments

The project plans to develop six interconnected training environments, each targeting specific capabilities:

Network Anomaly Detection: The AI analyzes network traffic patterns, learning to distinguish between unusual-but-benign behavior (like a new software deployment) and actual attacks (like data exfiltration). It learns concepts like baseline behavior, temporal patterns, and protocol anomalies.

Phishing Email Detection: Beyond simple keyword matching, the AI learns to verify sender authenticity, check URL reputation, and identify social engineering tactics. It can even learn when to request additional information before making a determination.

Security Configuration Auditing: The AI reviews infrastructure-as-code (Kubernetes manifests, Terraform configurations) to identify misconfigurations. It learns to use policy-as-code tools like Open Policy Agent to mathematically verify compliance with security standards.

Vulnerability Repair: Given vulnerable code and failing security tests, the AI must produce minimal patches that fix the vulnerability without breaking functionality. It learns concepts like input validation, secure defaults, and the principle of least privilege.

Red Team Attack Simulation: The AI learns to think like an attacker, finding ways to bypass defenses or elicit unsafe behavior from other AI systems. This adversarial training helps identify weaknesses before real attackers do.

Defensive Alignment: The AI learns to maintain helpful behavior while resisting manipulation attempts. It practices handling edge cases where being helpful might mean enabling harmful actions.

Each environment shares common infrastructure and evaluation methods, allowing skills to transfer. An AI that understands how attackers probe for vulnerabilities in one context can apply that knowledge to detect similar probing in network traffic.

The Technical Innovation: Executable Verification

The core hypothesis of Security Verifiers is that we can replace subjective training data with executable verification. Traditional AI training relies on human-labeled data: “This email is phishing because an expert said so.” This project explores whether we can instead use executable verification: “This email is phishing because clicking its link leads to a credential harvesting site that we can programmatically verify.”

If successful, this shift could have profound implications. Human labelers make mistakes, have biases, and disagree with each other. Executable verification would provide consistent, reproducible ground truth. When the AI fixes a code vulnerability, we wouldn’t ask humans if the fix looks good - we’d run the exploit attempt and verify it fails. When the AI identifies a misconfiguration, we’d use automated policy engines to prove the configuration violates security requirements.
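As a rough illustration of what "run the exploit attempt and verify it fails" could look like, here is a toy verifier for a path traversal fix. Everything in it is hypothetical: the functions, the payloads, and the binary reward are stand-ins chosen for brevity, not code from the Security Verifiers repository.

```python
import posixpath


def vulnerable_resolve(base, user_path):
    """Naive join: '../' sequences or absolute paths escape the base directory."""
    return posixpath.normpath(posixpath.join(base, user_path))


def patched_resolve(base, user_path):
    """Candidate fix: reject anything that resolves outside the base directory."""
    resolved = posixpath.normpath(posixpath.join(base, user_path))
    if resolved != base and not resolved.startswith(base.rstrip("/") + "/"):
        raise ValueError("path escapes base directory")
    return resolved


def verify_patch(resolve, base="/srv/files"):
    """Execute exploit payloads and a functional check against a candidate.

    Returns (exploit_blocked, functionality_kept).
    """
    exploit_blocked = True
    for payload in ["../../etc/passwd", "/etc/passwd", "a/../../secret"]:
        try:
            result = resolve(base, payload)
        except ValueError:
            continue  # rejected outright: the exploit failed
        if not result.startswith(base + "/"):
            exploit_blocked = False  # the payload escaped the base directory
    try:
        functionality_kept = resolve(base, "reports/q1.txt") == base + "/reports/q1.txt"
    except ValueError:
        functionality_kept = False  # the fix broke a legitimate request
    return exploit_blocked, functionality_kept


def reward(resolve):
    """Binary reward: the fix must stop the exploit and keep the feature working."""
    exploit_blocked, functionality_kept = verify_patch(resolve)
    return 1.0 if exploit_blocked and functionality_kept else 0.0


def reject_everything(base, user_path):
    """An over-strict 'fix' that blocks attacks by breaking the feature."""
    raise ValueError("all paths rejected")


print(reward(vulnerable_resolve), reward(patched_resolve), reward(reject_everything))
```

No human judges whether the patch looks right. The unpatched code fails because a payload escapes, and the over-strict fix fails because it breaks legitimate use, which mirrors the earlier point that a fix that breaks production is worse than no fix.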

Why Open Source Matters

Making this system open source through Prime Intellect’s Environments Hub democratizes the development of sophisticated security AI training. Any researcher, company, or security team can:

Train AI systems on production-grade security challenges

Verify that AI systems actually understand security principles

Contribute new environments as threats evolve

Ensure security AI capabilities remain accessible to all defenders

Small companies face the same threats as tech giants but lack the resources to build custom AI training infrastructure. An open-source Security Verifiers aims to level this playing field.

Current Progress and Future Development

The network anomaly detection environment is operational as a proof-of-concept, with initial testing underway through Prime Intellect’s platform. The remaining five environments are in various stages of design and development, each targeting different security challenges identified through industry experience.

The broader ecosystem is evolving rapidly. Multiple research teams are now using reinforcement learning for cybersecurity - from automated penetration testing to real-time defense systems. These parallel efforts validate our approach while creating opportunities for collaboration.

The project’s experimental roadmap includes exploring:

How to expand datasets to cover emerging threat patterns

Whether more sophisticated tool integrations improve learning

If skills can genuinely transfer between different security environments

Ways to create meaningful leaderboards that track actual security capability rather than rewarding metric gaming

The Broader Impact

Security Verifiers addresses a fundamental challenge in AI development: ensuring AI systems can handle critical tasks reliably. When an AI system protects your company’s network, reviews code for vulnerabilities, or makes security decisions, you need confidence in its training methodology.

As Carnegie Mellon researcher Brian Singer notes: “Right now, only big companies can afford to run professional tests on their networks via expensive human red teams, and they might only do that once or twice a year. In the future, AI could run those tests constantly, catching problems before real attackers do. That could level the playing field for smaller organizations.”

This project provides that confidence through verifiable, reproducible training on real security challenges. As AI becomes more central to cybersecurity, the quality of its training determines whether it becomes our strongest defense or weakest link.

Building on Open Infrastructure

Security Verifiers leverages two key open-source tools that are making this kind of experimentation possible:

The Verifiers Library: Created by Will Brown at Prime Intellect, this provides modular building blocks for creating RL environments - standardized components that handle the technical complexity of multi-turn interactions, tool use, and reward calculation. The library emphasizes *verifiable* environments where outcomes can be programmatically checked rather than relying on subjective human feedback.

The Environments Hub: Launched in August 2025 by Prime Intellect, this platform addresses a critical gap - major AI labs create proprietary training environments and keep them closed, making it difficult for smaller organizations to compete. The Hub provides an open alternative where researchers can share and reuse training environments. Within its first week, over 100 environments were contributed by the community.

These tools lower the barriers to RL research by handling infrastructure complexity and enabling knowledge sharing. For Security Verifiers, this means we can focus on defining security challenges rather than rebuilding training infrastructure, and any improvements can benefit the broader community.

Getting Involved

This project is an early-stage experiment that welcomes contributions and feedback from diverse perspectives. Security professionals can help define realistic threat scenarios and verification methods. AI researchers can suggest improvements to training approaches. Software engineers can contribute to the infrastructure and tooling.

You don’t need expertise in both security and AI to participate. The modular design allows security experts to define challenges without understanding RL, while AI researchers can improve training methods without deep security knowledge.

The urgency is clear. We’re entering an era where cyber defenses that rely on human operators may not be able to keep up with machine-speed attacks. We need to explore defenses that can operate at the same pace.

The experimental code is available at github.com/intertwine/security-verifiers, and the proof-of-concept network anomaly environment can be tested at Prime Intellect’s Environments Hub. This is very much a work in progress - feedback, critiques, and alternative approaches are all welcome.

Bryan Young is a Principal Software Engineer at Expel Cybersecurity and the creator of Security Verifiers. With over 20 years of experience at the intersection of AI and critical systems, he’s working to ensure AI enhances our digital security infrastructure, safety, and alignment.

Sources & Links

“When LLMs autonomously attack” - Carnegie Mellon University Engineering News (July 2025)

“Research shows LLMs can conduct sophisticated attacks without humans” - Cybersecurity Dive (July 2025)

“AI Cybersecurity Threats 2025: How Artificial Intelligence Became the Biggest Security Challenge” - Axis Intelligence (2025)

“Zero-Click AI Vulnerability Exposes Microsoft 365 Copilot Data Without User Interaction” - Hacker News (June 2025)

“EchoLeak (CVE-2025-32711) Show us That AI Security is Challenging” - Checkmarx (July 2025)

“Multi-Agent Reinforcement Learning for Cybersecurity: Classification and survey” - ScienceDirect (February 2025)

“Cyber security Enhancements with reinforcement learning: A zero-day vulnerability identification perspective” - PLoS One (May 2025)

“Deep Reinforcement Learning for Adaptive Cyber Defense in Network Security” - ACM Digital Library (2025)

“Awesome RL for Cybersecurity” - GitHub

“Reinforcement Learning for feedback-enabled cyber resilience” - ScienceDirect (January 2022)