OpenAI o1: Waiting for Strawberry in the Veneto

OpenAI Releases o1 | Recapping the Strawberry Saga | AI Hype vs. Reality

Saturdays are market days in Caprino Veronese. Held for generations in the stone-cobbled heart of this sleepy Italian town, the stall-keepers’ bazaar is a short drive down grape-lined hills from the 15th-century farmhouse where I'd spend my first days of August vacation. Amid stands overflowing with warm pecorino, plump figs, and melt-in-your-mouth prosciutto, I paused to taste the strawberries from Verona.

Juicier than American berries, they caught my eye not just for their luster, but as timely reminders of home, where "Strawberry" had become the code name for a much-hyped, as-yet-unrevealed AI breakthrough from OpenAI. Tasting the fruit, I found myself drawn back into the newly unfolding saga playing out on X, an irresistible lure for a terminally online AI-hype junkie like me. Even here, in this timeless corner of the Italian Veneto, ceaseless anticipation of The Next Big Thing in AI found ways to pull me back in.

Listen to the AI-generated “O1 Deep Dive Podcast” based on this article. Created autonomously with NotebookLM from Google Gemini (WARNING: Hallucinations!)

Hype Machine in Overdrive

It had all started with a series of cryptic tweets from an account named @iruletheworldmo. In the ever-vigilant AI community, these enigmatic messages sparked a wildfire of speculation. Was this an OpenAI insider? A clever marketing ploy? Or just an exceptionally well-informed enthusiast?

The intrigue deepened when Sam Altman, CEO of OpenAI, responded to one of these tweets with a tantalizing "amazing tbh," and then posted his own photo of strawberries. In the world of tech, where every utterance from industry leaders is scrutinized like ancient runes, this brief interaction was akin to throwing gasoline on an already roaring fire.

Altman’s post was viewed almost 7 million times. Forums exploded with theories. Discord servers hummed with activity. The term "Project Strawberry" began trending, with thousands speculating on what revolutionary advancement OpenAI might be on the cusp of revealing. Some claimed it would be a quantum leap in language understanding, others bet on unprecedented reasoning capabilities. The more outlandish predictions spoke of artificial general intelligence (AGI) finally within reach.

August Anticipation, September Reality

As I savored those August strawberries in the Veneto, Beckett's "Waiting for Godot" came to mind. The AI community's wait would stretch beyond my vacation, mirroring the play's endless anticipation.

"We are waiting for Godot to come," Beckett's character says, echoing our month-long vigil for "Project Strawberry." Through August, Internet sleuths dissected every random tweet and comment, certain that each day might bring the revelation.

But like Godot, our anticipated AI breakthrough didn't arrive on cue. As August faded into September, the wait continued, proving that in tech, as in theater, timing is everything—and rarely predictable.

Now, in mid-September, OpenAI has finally unveiled the o1 model. Our "Godot" has arrived, weeks after the initial frenzy. The question remains: After all this anticipation, will o1 live up to the summer's fevered expectations?

OpenAI's o1: A New Chapter in AI Reasoning

OpenAI's mid-September unveiling of the o1 model marked a significant moment in the AI landscape, generating both excitement and skepticism among experts and users. This new model represents a shift in focus towards enhanced reasoning capabilities, particularly excelling in complex tasks involving science, mathematics, and coding.

Olympiad-Level Reasoning

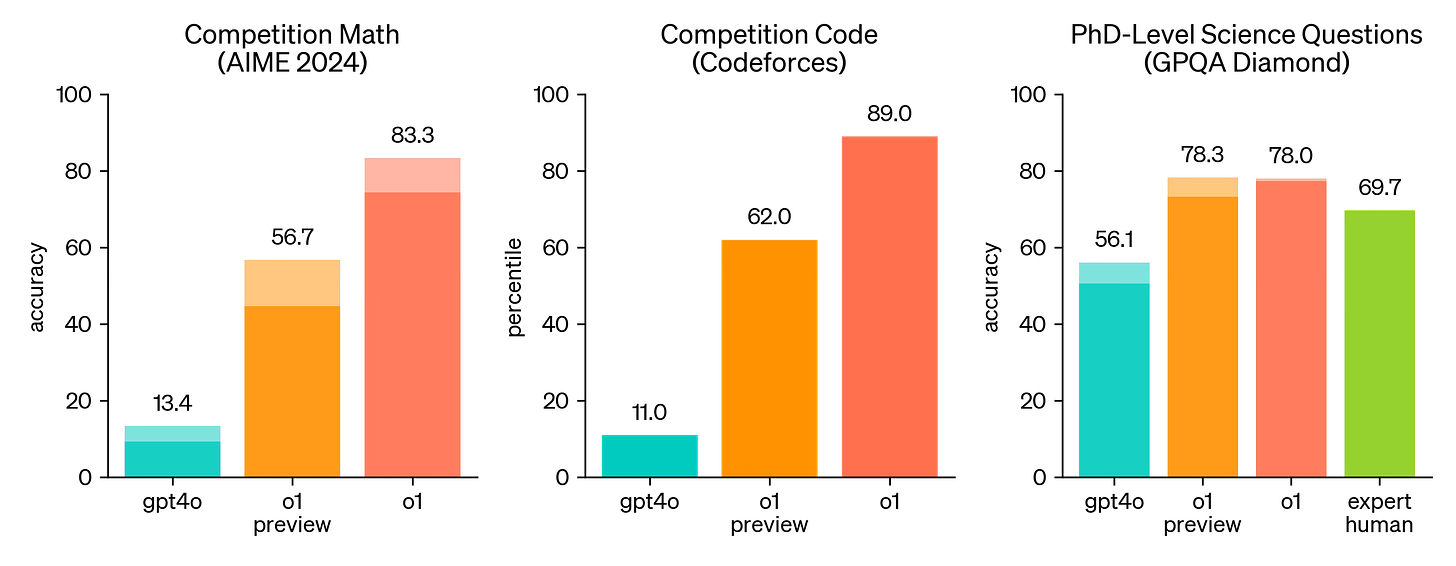

o1's standout feature is its chain-of-thought reasoning approach, which mimics human problem-solving strategies. The model "thinks" before responding, planning ahead and synthesizing results from multiple subtasks. This approach has led to impressive results: OpenAI reports that o1 correctly solved 83% of the problems on a qualifying exam for the International Mathematical Olympiad, a substantial leap from the 13% managed by its predecessor, GPT-4o. In Codeforces programming competitions, o1 ranked in the 89th percentile, showcasing its prowess in tackling intricate coding challenges.

Slower and More Expensive (for now…)

However, o1's advancements come with notable trade-offs. The model is significantly slower and more expensive to use than its predecessors, and it lacks features like web browsing and image processing that users had come to expect. It still struggles with some simpler tasks and with factual knowledge about the world, and hallucinations persist, even if they may be less frequent.

Better at Deception

Safety and ethical considerations remain at the forefront of discussions surrounding o1. OpenAI claims improved safety measures, with the model scoring higher on jailbreaking tests compared to previous versions. Yet, some experts warn that o1's enhanced reasoning capabilities, particularly in areas like deception, could pose new risks, reigniting debates about AI safety and regulation.

A Mixed Early Response

The tech community's response to o1 has been mixed. While some view it as an impressive leap forward in AI reasoning, others see it as incremental progress with significant practical limitations. Many experts emphasize the need for further testing and evaluation to fully understand o1's capabilities and implications.

Looking ahead, o1 could have far-reaching impacts across various fields. In healthcare, it might assist with complex diagnoses and treatment planning. In scientific research, it could help analyze intricate datasets and design experiments. However, realizing these potential benefits will require careful implementation and ongoing ethical considerations.

As the dust settles on the o1 launch, it's clear that while the model represents a significant step forward in certain areas of AI reasoning, it also introduces new challenges and questions. The coming months will likely see intense scrutiny and evaluation as the AI community grapples with the implications of this new technology and its place in the evolving landscape of artificial intelligence.

Hype vs. Reality: The o1 Saga

As the Veronese strawberries of August gave way to September's harvest, OpenAI's o1 model finally emerged from the mists of speculation. Like Beckett's Godot, the arrival was both anticipated and unexpected, leaving us to ponder the nature of progress in the world of AI.

Anticipation vs. Reality

The fevered speculation of August painted "Project Strawberry" as a potential paradigm shift in AI capabilities. Whispers of AGI and unprecedented breakthroughs filled forums and social media. The reality of o1, while impressive in many ways, tells a more nuanced story:

Reasoning Prowess: The hype promised revolutionary advances, and o1 delivered significant improvements in complex reasoning tasks, particularly in STEM fields. Its performance on mathematical and coding challenges exceeded expectations.

Broad Capabilities: Speculation hinted at a jack-of-all-trades AI. In reality, o1 excels in specific areas but struggles with simpler tasks and broad world knowledge, highlighting the trade-offs in AI development.

Speed and Accessibility: The anticipation overlooked practical considerations. o1's slower processing and higher costs were unexpected hurdles, tempering excitement about its real-world applications.

Safety and Ethics: While improved safety measures were implemented, the enhanced reasoning capabilities have reignited concerns about AI risks, an aspect not fully anticipated in the pre-launch excitement.

Conclusion: The Bittersweet Taste of Progress, with a Promise of More to Come

As we reflect on the journey from August's anticipation to September's reality, the o1 saga offers valuable lessons about AI development and our relationship with technological progress:

The Nature of Innovation: While o1 didn't fully live up to the fevered speculation, it represents a significant leap forward in AI reasoning capabilities. By restarting their model numbering from 1, OpenAI signals a new chapter in their approach to AI development – one that builds upon their GPT series but charts a distinct path forward.

The Danger of Hype: The AI community's fervent speculation, while exciting, set unrealistic expectations. Yet, beneath the hype, o1 delivers substantial improvements in complex reasoning tasks, reminding us that progress, even when it doesn't meet our wildest dreams, can still be meaningful.

Balancing Optimism and Caution: While o1's advancements are cause for excitement, the ethical concerns raised by experts underscore the need for responsible development and deployment of AI technologies.

The Value of Patience and Persistence: Just as the Veronese strawberries gained their sweetness through patient cultivation, o1's development required time and careful refinement. More importantly, OpenAI's approach suggests that the best is yet to come.

A New Paradigm in AI Scaling: Perhaps o1's most revolutionary aspect is its potential for inference-time scaling. Unlike previous models constrained primarily by training-time scaling, o1 can improve its performance with additional compute time during use. This opens up new avenues for AI advancement, potentially allowing for rapid improvements without the need for complete retraining.
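To make the idea of inference-time scaling a little more concrete, here is a toy sketch. It is not how o1 works internally (OpenAI has not published those details); it swaps in a much simpler stand-in technique, majority voting over repeated independent attempts. The assumptions are loud ones: a task with only two possible answers, a hypothetical solver that is right 60% of the time on any single attempt, and an odd number of attempts so that ties cannot happen. The function name `majority_vote_accuracy` is mine, purely for illustration.

```python
from math import comb


def majority_vote_accuracy(p_correct: float, n_samples: int) -> float:
    """Probability that a strict majority of n independent attempts is right.

    Assumes a two-answer task where each attempt is independently correct
    with probability p_correct, and an odd n_samples so there are no ties.
    """
    if n_samples % 2 == 0:
        raise ValueError("use an odd number of samples to avoid ties")
    needed = n_samples // 2 + 1  # smallest number of correct votes that wins
    # Sum the binomial probabilities of getting at least `needed` correct votes.
    return sum(
        comb(n_samples, k) * p_correct**k * (1 - p_correct) ** (n_samples - k)
        for k in range(needed, n_samples + 1)
    )


if __name__ == "__main__":
    # More attempts means more compute spent at inference time.
    for n in (1, 3, 5, 11, 21):
        print(f"{n:>2} samples -> {majority_vote_accuracy(0.6, n):.3f}")
```

Under these assumptions, accuracy climbs from 0.600 with one attempt to 0.648 with three and about 0.683 with five, without touching the underlying model. That is the general shape of the trade: the same weights, more compute per question, better answers.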

As we look to the future, the o1 release reminds us that the path of AI development, like Beckett's winding road, is full of unexpected turns. It challenges us to temper our expectations with realism, to approach each new development with both optimism and critical thinking. Yet, it also gives us reason for genuine excitement about the pace of progress.

OpenAI's decision to start anew with o1 suggests a confidence in this model's architecture as a foundation for future breakthroughs. The ability to scale at inference time could lead to a more dynamic and rapidly evolving AI landscape, where improvements happen not just with each new model release, but potentially in real-time as the model is used.

In the end, the true measure of progress in AI won't be found in any single model or breakthrough, but in how these technologies ultimately enhance human capabilities and improve lives. As we continue to navigate this rapidly evolving landscape, let us carry forward the lessons learned from the o1 saga – balancing anticipation with patience, excitement with thoughtful consideration, and always striving to align our technological advancements with our deepest human values.

The journey of AI, much like savoring a perfect strawberry, is as much about the experience and the lessons learned along the way as it is about reaching a final destination. With o1, we've tasted the first fruits of a new approach to AI. As we move forward, let's approach each new development not just with anticipation, but with wisdom gained from every step of the journey, ready for the surprises and delights that await us in this ever-ripening field.